This article is part of the Learning Docker series.

- 1 - A Beginner’s Guide to Docker (This Article)

- 2 - Understanding Docker Layers

Note: This blog was originally posted at https://medium.com/kishanreddy1810/a-beginners-guide-to-docker-4915d691b972

You’re one of the cool kids. You have heard of Docker and know that you can package your code in a container and run it in an isolated environment. You’ve heard a lot of companies are using it to build, test and deploy their applications from development to production. But you haven’t had the chance to work with Docker.

I wonder, would you still be one of the cool kids if word of this got out?

Don’t worry. I was there myself. I’m no expert, but I’ve got you covered. Let’s get ready to dive into Docker.

Next steps:

- Why should I use Docker?

- Installing Docker

- How will I package all my dependencies?

- How do I run this packaged image?

- How do we configure a docker image-based application?

1. Why should I use Docker?

Every application has its own set of dependencies. If I want a fully working instance of my application, the traditional approach is to list all the dependencies in a script, or in a requirements.txt file in the case of a Python app, and install them on every machine.

Let’s look at some of the problems that can occur:

- My requirements install successfully on my machine but not on my colleague’s. Ah yes, the infamous “Works on my machine!” conundrum, caused by OS, kernel or system-level differences.

- The changes I made to my app worked flawlessly on my machine but then failed in a production environment due to the above-mentioned differences. Can you imagine the horror?!

- I wrongly configured some of my dependencies. How fast can I tear down and rebuild from scratch?

Looking at the above problems, the common theme is a lack of environment parity, as described by The Twelve-Factor App (https://12factor.net/). If all environments were alike, couldn’t we build the application once with a minimal default configuration and run it everywhere, overriding the environment or configuration only where required?

Here are some questions:

- Can we package our application such that it can run anywhere with its dependencies?

- Can we solve this by making sure the configuration/packaging stays as consistent as possible across all environments?

- Can we ensure that the application runtime stays almost the same for all environments?

- Can we build the application once and then run anywhere?

Docker to the Rescue!

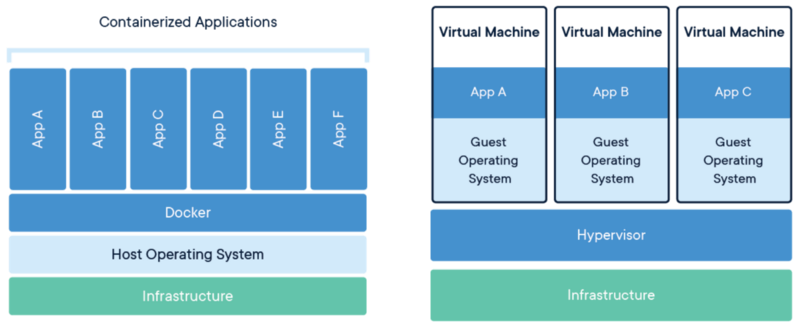

Docker is an open-source project for packaging any application as a self-sufficient, independently deployable unit, aka a container, that can run in the cloud, on a desktop or on any other platform. The idea is that the application runs in a completely isolated environment on top of the Docker Engine, which in turn runs on top of the host operating system. The isolated applications look somewhat like below, in contrast with traditional virtualization software:

2. Installing Docker

Where do we start?

First things first, let’s download and install the appropriate Docker CE (Community Edition) for your operating system from https://docs.docker.com/install/#supported-platforms

Verifying that Docker is working

Just run docker run hello-world. It’s working if you see the following output:

    Unable to find image 'hello-world:latest' locally
    latest: Pulling from library/hello-world
    1b930d010525: Pull complete
    Digest: sha256:2557e3c07ed1e38f26e389462d03ed943586f744621577a99efb77324b0fe535
    Status: Downloaded newer image for hello-world:latest

    Hello from Docker!
    This message shows that your installation appears to be working correctly.

    To generate this message, Docker took the following steps:
     1. The Docker client contacted the Docker daemon.
     2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
        (amd64)
     3. The Docker daemon created a new container from that image which runs the
        executable that produces the output you are currently reading.
     4. The Docker daemon streamed that output to the Docker client, which sent it
        to your terminal.

    To try something more ambitious, you can run an Ubuntu container with:
     $ docker run -it ubuntu bash

    Share images, automate workflows, and more with a free Docker ID:
     https://hub.docker.com/

    For more examples and ideas, visit:
     https://docs.docker.com/get-started/

Congratulations!

You now have a working docker installation on your machine.

3. Packaging an application

Now that we have installed Docker on our system, let’s explore how to package an application using Docker.

For the sake of simplicity, let’s take the example of a very simple Flask application that greets the caller by the name passed in the request.

Create a folder called docker-demo in your workspace.

Now create a file called app.py with the following contents:

    from flask import Flask, request

    app = Flask(__name__)


    @app.route('/hello')
    def api_hello():
        if 'name' in request.args:
            return 'Hello ' + request.args['name']
        else:
            return 'Hello John Doe'


    if __name__ == '__main__':
        app.run()

Let’s create a requirements.txt file for the package dependencies in the same docker-demo folder.

    Flask==1.1.1
    gunicorn==19.9.0

Now that we have the python application and also the list of dependencies ready, let’s work on packaging the application.

A Dockerfile has all the steps required for an application to be built as a Docker image. In the same docker-demo folder, let’s create a file called Dockerfile with the following contents:

    FROM python:latest
    COPY requirements.txt /usr/src/code/
    WORKDIR /usr/src/code
    RUN pip install -r requirements.txt
    COPY . /usr/src/code/
    CMD gunicorn --bind 0.0.0.0:8000 app:app

Let’s take a closer look at each line of the Dockerfile:

Line 1:

FROM python:latest

This line tells the Docker engine to use the latest available python image as the base for our application image.

Line 2:

COPY requirements.txt /usr/src/code/

This line tells the Docker engine to copy the file requirements.txt from our current project folder to the path /usr/src/code/ in the image.

Line 3:

WORKDIR /usr/src/code

This line sets /usr/src/code as the working directory for the instructions that follow and for the running container.

Line 4:

RUN pip install -r requirements.txt

This line installs our application dependencies into the image.

Line 5:

COPY . /usr/src/code/

This line copies the contents of our current project folder to the folder /usr/src/code/ in the image.

Line 6:

CMD gunicorn --bind 0.0.0.0:8000 app:app

This line defines the default command that runs when a container is started from the image.
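To make the six instructions easier to line up with the build log shown later, here is a minimal Python sketch that splits the Dockerfile text into (instruction, arguments) pairs and numbers them the way the log does. The function name parse_dockerfile is made up for this illustration, and the parsing is deliberately naive (no line continuations, no parser directives), so treat it as a reading aid rather than how Docker actually parses the file.

```python
DOCKERFILE = """\
FROM python:latest
COPY requirements.txt /usr/src/code/
WORKDIR /usr/src/code
RUN pip install -r requirements.txt
COPY . /usr/src/code/
CMD gunicorn --bind 0.0.0.0:8000 app:app
"""


def parse_dockerfile(text):
    """Return (instruction, arguments) pairs, skipping blank lines and comments.

    Hypothetical helper for illustration only; not Docker's real parser.
    """
    steps = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        # The first word is the instruction, the rest are its arguments.
        instruction, _, arguments = line.partition(" ")
        steps.append((instruction.upper(), arguments.strip()))
    return steps


steps = parse_dockerfile(DOCKERFILE)
for number, (instruction, arguments) in enumerate(steps, start=1):
    print(f"Step {number}/{len(steps)} : {instruction} {arguments}")
```

Each printed line corresponds to one "Step N/6" entry in the output of docker build.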

Now that we have some clarity about what is written in the Dockerfile, let’s try to build the application.

Run the following command on the shell:

docker build . -f Dockerfile -t docker-demo

This command tells Docker to build the image described by the Dockerfile (-f), using our current folder . as the build context, and to tag (-t) the resulting image with the name docker-demo.

You should see the following output if the image build is successful:

    docker-demo$ docker build . -f Dockerfile -t docker-demo
    Sending build context to Docker daemon 1.47MB
    Step 1/6 : FROM python:latest
     ---> 4c0fd7901be8
    Step 2/6 : COPY requirements.txt /usr/src/code/
     ---> Using cache
     ---> 0ae7f5e0b8f1
    Step 3/6 : WORKDIR /usr/src/code
     ---> Using cache
     ---> 758680b1755e
    Step 4/6 : RUN pip install -r requirements.txt
     ---> Using cache
     ---> a4d56393776b
    Step 5/6 : COPY . /usr/src/code/
     ---> e37d0a98dcd1
    Step 6/6 : CMD gunicorn --bind 0.0.0.0:8000 app:app
     ---> Running in 4e30f7d4bb82
    Removing intermediate container 4e30f7d4bb82
     ---> 041dbd6f6add
    Successfully built 041dbd6f6add
    Successfully tagged docker-demo:latest
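The last line of the output says docker-demo:latest even though we only passed docker-demo: when no tag is given, Docker falls back to latest. A rough sketch of that defaulting rule in Python, assuming a simplified image reference with no registry host, port or digest (split_image_ref is a hypothetical helper, not a Docker API):

```python
def split_image_ref(ref):
    """Split 'name[:tag]' into (name, tag), defaulting the tag to 'latest'.

    Simplification for illustration: registry hosts with ports and
    @sha256 digests are not handled.
    """
    name, separator, tag = ref.partition(":")
    if not separator:
        tag = "latest"
    return name, tag


print(split_image_ref("docker-demo"))      # ('docker-demo', 'latest')
print(split_image_ref("docker-demo:env"))  # ('docker-demo', 'env')
```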

4. Running a Docker Image

Run the following command to run the application that is now packaged as a docker image:

docker run -p 8000:8000 docker-demo

Using the -p (publish) option, written as host:container, we have published port 8000 of the container on port 8000 of the host machine.
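Since both numbers are the same here, it is easy to forget which side is which. A small Python sketch that spells it out, assuming only the simple HOST:CONTAINER form (Docker also accepts forms with an IP address or a protocol suffix, which this hypothetical parse_publish helper does not handle):

```python
from typing import NamedTuple


class PortMapping(NamedTuple):
    host_port: int
    container_port: int


def parse_publish(spec):
    """Parse a simple 'HOST:CONTAINER' publish spec into a PortMapping."""
    host, container = spec.split(":")
    return PortMapping(int(host), int(container))


print(parse_publish("8000:8000"))
# PortMapping(host_port=8000, container_port=8000)
print(parse_publish("8000:9000"))
# PortMapping(host_port=8000, container_port=9000)
```

With the second mapping, a request to port 8000 on the host would reach whatever is listening on port 9000 inside the container.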

Once successfully run, you’ll see the following output:

    [2019-07-09 20:43:15 +0000] [6] [INFO] Starting gunicorn 19.9.0
    [2019-07-09 20:43:15 +0000] [6] [INFO] Listening at: http://0.0.0.0:8000 (6)
    [2019-07-09 20:43:15 +0000] [6] [INFO] Using worker: sync
    [2019-07-09 20:43:15 +0000] [9] [INFO] Booting worker with pid: 9

You can test the application URL using curl from the host machine.

    docker-demo$ curl 'http://localhost:8000/hello?name=Kishan'
    Hello Kishan
    docker-demo$ curl 'http://localhost:8000/hello'
    Hello John Doe
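If you would rather script this check than type curl by hand, the same request can be made with Python's standard library. This is only a sketch: build_hello_url and fetch_greeting are names invented here, and fetch_greeting works only while the container from the previous step is running.

```python
from urllib.parse import urlencode
from urllib.request import urlopen


def build_hello_url(name=None, base="http://localhost:8000"):
    """Build the /hello URL, adding ?name=... only when a name is given."""
    url = f"{base}/hello"
    if name is not None:
        url += "?" + urlencode({"name": name})
    return url


def fetch_greeting(name=None):
    """Call the running container and return the response body as text."""
    with urlopen(build_hello_url(name), timeout=5) as response:
        return response.read().decode("utf-8")


print(build_hello_url("Kishan"))  # http://localhost:8000/hello?name=Kishan
print(build_hello_url())          # http://localhost:8000/hello
```

With the container up, fetch_greeting("Kishan") should return the same text that curl printed.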

You have now run an application using Docker!

5. Configuring a Docker-Based Application

Now that we’ve built it, let’s consider the real-world scenario of configuring an application. Usually, any application will have a reasonable default and then you override it in each environment as per your needs.

There are two types of configurations used by applications:

- Environment variables

- File-based config for processes

Let’s make a change in the application so that it does something different based on the configuration.

A. Environment variable based config

Let’s assume your application has to do something different based on the current environment. For the sake of simplicity, I’ve edited our previous Flask app so that it also prints the name of the current environment:

    from flask import Flask, request
    import os

    app = Flask(__name__)


    @app.route('/hello')
    def api_hello():
        env = os.environ.get("env", "prod")
        name = request.args.get("name", "John Doe")
        return f"Hello {name} from '{env}'"


    if __name__ == '__main__':
        app.run()

Here, the application is configured to use the environment variable env while sending out the response. If it doesn’t get any value in env , it defaults to prod.
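The lookup-with-default behaviour can be tried without Flask or Docker. In this sketch, build_greeting is a stand-in for the route handler above; it takes the query arguments and the environment as plain dictionaries, which is an assumption made here so that it is easy to experiment with:

```python
import os


def build_greeting(args, environ=os.environ):
    """Mirror the handler's logic: fall back to defaults when a key is missing."""
    env = environ.get("env", "prod")
    name = args.get("name", "John Doe")
    return f"Hello {name} from '{env}'"


print(build_greeting({"name": "Kishan"}, environ={}))              # Hello Kishan from 'prod'
print(build_greeting({"name": "Kishan"}, environ={"env": "dev"}))  # Hello Kishan from 'dev'
print(build_greeting({}, environ={}))                              # Hello John Doe from 'prod'
```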

Now let’s make some changes in the Dockerfile as well:

    FROM python:latest
    COPY requirements.txt /usr/src/code/
    WORKDIR /usr/src/code
    RUN pip install -r requirements.txt
    COPY . /usr/src/code/
    ENV env prod
    CMD gunicorn --bind 0.0.0.0:8000 app:app

You might notice this additional line: ENV env prod

This sets an environment variable env with the value prod in the image, so every container started from it sees env=prod unless the value is overridden.

Let’s try this out now:

docker build . -t docker-demo:env

We have tagged this new image as docker-demo:env.

You may want to stop your older container with Ctrl+C before running this one, in case you haven’t done that already.

Let’s run this new image:

    docker-demo$ docker run --rm --name docker-demo -p 8000:8000 docker-demo:env
    [2019-07-13 12:29:24 +0000] [6] [INFO] Starting gunicorn 19.9.0
    [2019-07-13 12:29:24 +0000] [6] [INFO] Listening at: http://0.0.0.0:8000 (6)
    [2019-07-13 12:29:24 +0000] [6] [INFO] Using worker: sync
    [2019-07-13 12:29:24 +0000] [9] [INFO] Booting worker with pid: 9

You might notice two new options: --rm and --name.

--rm tells the Docker engine to delete this container once it exits.

--name tells it to name the container docker-demo.
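If you ever want to start the container from a script instead of typing the command, the options can be assembled as a list of arguments. A minimal sketch, where docker_run_command is a hypothetical helper written for this post and covers only the options we have used so far:

```python
import shlex


def docker_run_command(image, name=None, publish=None, remove=False):
    """Assemble a 'docker run' argument list from the options used so far."""
    argv = ["docker", "run"]
    if remove:
        argv.append("--rm")             # delete the container when it exits
    if name:
        argv += ["--name", name]        # give the container a fixed name
    if publish:
        argv += ["-p", publish]         # HOST:CONTAINER port mapping
    argv.append(image)
    return argv


argv = docker_run_command(
    "docker-demo:env", name="docker-demo", publish="8000:8000", remove=True
)
print(shlex.join(argv))
# docker run --rm --name docker-demo -p 8000:8000 docker-demo:env
```

The resulting list can be handed to subprocess.run(argv) on a machine where Docker is installed.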

Let’s make an API call.

    docker-demo$ curl 'http://localhost:8000/hello?name=Kishan'
    Hello Kishan from 'prod'

Now, we see that the application is using the configured environment from the Dockerfile. How about we verify that?

Let’s open a bash shell inside the running container:

    docker-demo$ docker exec -it docker-demo bash
    root@6160a487196c:/usr/src/code# echo $env
    prod
    root@6160a487196c:/usr/src/code#

Voila!

Now, let’s just say I’m in a different environment named dev because I encountered an error and would like to reproduce it. How should I go about overriding the env config for a different environment? Let’s try to change the environment variable env during the docker container startup:

    docker-demo$ docker run --rm --name docker-demo -e env=dev -p 8000:8000 docker-demo:env
    [2019-07-13 12:29:24 +0000] [6] [INFO] Starting gunicorn 19.9.0
    [2019-07-13 12:29:24 +0000] [6] [INFO] Listening at: http://0.0.0.0:8000
    [2019-07-13 12:29:24 +0000] [6] [INFO] Using worker: sync
    [2019-07-13 12:29:24 +0000] [9] [INFO] Booting worker with pid: 9

Is it really that easy? How about we make an API call?

    docker-demo$ curl 'http://localhost:8000/hello?name=Kishan'
    Hello Kishan from 'dev'

Food for thought: notice that we built the application only once. By overriding the configuration variable at startup, we made the same image run under a different environment.
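One way to picture the precedence at play: the value from ENV in the Dockerfile is a default baked into the image, and any -e flag given to docker run replaces it for that container only. A toy Python model of that rule (the function effective_environment and its dictionaries are illustrative, not a Docker API):

```python
def effective_environment(image_env, run_overrides):
    """Return the environment a container sees: image defaults, then -e overrides."""
    merged = dict(image_env)       # copy, so the image defaults stay untouched
    merged.update(run_overrides)   # values passed with -e win
    return merged


image_env = {"env": "prod"}  # from "ENV env prod" in the Dockerfile

print(effective_environment(image_env, {}))              # {'env': 'prod'}
print(effective_environment(image_env, {"env": "dev"}))  # {'env': 'dev'}
print(image_env)                                         # {'env': 'prod'}
```

The last line shows that the image itself is unchanged, which is why no rebuild was needed.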

B. File-based config

As always, things are never as simple as you might think. Not everything can be solved using environment variables. You might end up writing config files for tools like uwsgi, supervisor, gunicorn, nginx, etc. whose configuration simply cannot be put in an environment variable.

For simplicity’s sake, let’s configure gunicorn’s host and port binding configuration.

gunicorn accepts any valid python file as a configuration.

Let’s create a new file named configuration.py with the following contents:

bind = "0.0.0.0:8000"
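Because the config file is ordinary Python, its settings are just module-level variables. The sketch below writes the same one-line file to a temporary directory and reads it back with runpy from the standard library. It only illustrates the idea; it is not how gunicorn itself loads its configuration, and read_settings is a made-up helper.

```python
import runpy
import tempfile
from pathlib import Path


def read_settings(path):
    """Execute a Python config file and return its public top-level names."""
    namespace = runpy.run_path(str(path))
    # Drop module internals such as __name__ and __file__.
    return {key: value for key, value in namespace.items() if not key.startswith("_")}


with tempfile.TemporaryDirectory() as tmp:
    config_file = Path(tmp) / "configuration.py"
    config_file.write_text('bind = "0.0.0.0:8000"\n')
    settings = read_settings(config_file)

print(settings["bind"])  # 0.0.0.0:8000
```

One practical consequence: the file must be valid Python, so the quotes around the address have to be plain straight quotes.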

Let’s change the last line of our Dockerfile to support our new configuration.

From:

CMD gunicorn --bind 0.0.0.0:8000 app:app

To:

CMD gunicorn -c configuration.py app:app

Let’s build the image and give it a new tag.

docker build . -t docker-demo:config

Now let’s run the application:

    docker-demo$ docker run --rm --name docker-demo -p 8000:8000 docker-demo:config
    [2019-07-13 13:33:09 +0000] [6] [INFO] Starting gunicorn 19.9.0
    [2019-07-13 13:33:09 +0000] [6] [INFO] Listening at: http://0.0.0.0:8000
    [2019-07-13 13:33:09 +0000] [6] [INFO] Using worker: sync
    [2019-07-13 13:33:09 +0000] [9] [INFO] Booting worker with pid: 9

We can see that the application has successfully taken the config from the configuration.py file and bound to 0.0.0.0:8000.

Let’s talk about overriding this.

In case of an environment variable, we could use the -e switch to override the existing environment in the application container. How do we do this for a file?

Docker volumes are one way to add persistence to application containers by exposing host directories or files inside them. By default, containers have no persistence built in, as they are supposed to be lightweight, isolated and easy to tear down and rebuild. Volumes are an excellent way to pass configuration into a container or to get logs and other data out of it.

In our scenario, what we need is to change the content of configuration.py and then use this new config while running the image without having to build the docker image again.

Let’s create a file called configuration2.py with the contents as:

bind = "0.0.0.0:9000"

We are essentially changing the binding configuration of gunicorn from port 8000 to 9000 here.

Let’s kill the previous container and start a new one with the following command:

    docker-demo$ docker run --rm --name docker-demo -v `pwd`/configuration2.py:/usr/src/code/configuration.py -p 8000:9000 docker-demo:config
    [2019-07-13 13:52:30 +0000] [7] [INFO] Starting gunicorn 19.9.0
    [2019-07-13 13:52:30 +0000] [7] [INFO] Listening at: http://0.0.0.0:9000
    [2019-07-13 13:52:30 +0000] [7] [INFO] Using worker: sync
    [2019-07-13 13:52:30 +0000] [10] [INFO] Booting worker with pid: 10

We used the -v switch to mount the host file configuration2.py over the file configuration.py in the container, so the application reads the contents of configuration2.py instead. As you can see, gunicorn is now listening on port 9000 inside the container, as written in the new configuration. Because we also changed the port mapping to -p 8000:9000, the application is still reachable on port 8000 of the host.
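The pwd part of the command matters: a bare file name on the host side of -v would be read as a volume name rather than a path, so the command expands it to an absolute path first. A sketch of building the same argument from Python, assuming a POSIX-style host path (bind_mount_arg is a hypothetical helper):

```python
from pathlib import Path


def bind_mount_arg(host_file, container_path):
    """Build the value for -v as '<absolute host path>:<container path>'."""
    host_path = Path(host_file).resolve()  # absolute, relative to the current folder
    return f"{host_path}:{container_path}"


arg = bind_mount_arg("configuration2.py", "/usr/src/code/configuration.py")
print(arg)
```

The printed value depends on the folder you run it from; it should match what the pwd expansion produces in the shell command above.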

If you notice again, we have overridden the configuration without having to rebuild the application.

Let’s make that API call:

    docker-demo$ curl 'http://localhost:8000/hello?name=Kishan'
    Hello Kishan from 'prod'

And that concludes our tutorial!

What we have accomplished in this tutorial:

- Wrote a REST application.

- Wrote the dependencies for the application.

- Wrote the Dockerfile spec for the application.

- Built the application using the Dockerfile and tagged the image with a name.

- Ran the docker image and tested the URL endpoint.

- Configured the application using environment variables and config files.

- Overrode the configurations of environment variables and config file at runtime without rebuilding the image. (aka Build once, run anywhere)


Conclusion

Congratulations on finishing this tutorial!

The code used in this blog post can be found here. The commit history is in line with the examples we’ve covered. I’d like to convey my special thanks to Lalit and Nihar for proof-reading my ramblings and giving good inputs as to what this tutorial should cover.
